What can credentialing organizations do in order to maintain the integrity of their assessments? Dr. Chris Beauchamp provides advice on how to maintain confidence in the assessment process.

Test misconduct is one of the greatest threats that credentialing organizations face when designing and delivering high-stakes assessments. Candidate misconduct is not only becoming more common; it is also becoming more accepted in the public eye. The purpose of this blog post is to identify what credentialing organizations can do before, during, and after delivering an examination to maintain the integrity of their assessments. In doing so, this post aims to help maintain confidence in the assessment process and, by extension, in the professions that credentialing organizations serve.

Prevalence

Test misconduct appears to be increasing across several assessment sectors. In a literature review, Cizek (1999) reported that 73% of undergraduate test-takers admitted to cheating at least once during their studies. This figure is sharply higher than in the 1940s, when only about 20% of undergraduate students admitted to cheating. Publicized cases of test misconduct are also becoming more common in graduate school (across fields of study), as well as within the high-stakes credentialing world.

At the same time, society in general is growing more complacent about cheating. In a survey of undergraduate and graduate students, over 80% of respondents stated that they would not report test misconduct, even if they witnessed it first-hand.

In light of these trends, credentialing organizations must remain proactive and vigilant if they are to ensure the integrity of the assessment process and, by extension, maintain confidence in the many professions for which they serve as ‘gatekeepers’.

Preventing Test Misconduct Before the Test

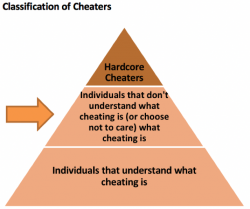

Not all cheaters are created equal. The most extreme are “hardcore cheaters”, who engage in test misconduct for malicious or financial purposes. It is admittedly difficult to prevent test misconduct among this group. However, the majority of test-takers who engage in test misconduct are not hardcore cheaters. Most are individuals who: 1) do not understand that what they are doing is not acceptable; or, 2) succumb to temptation during the examination process, and then engage in behaviour that would normally be out of character. This much larger group is more amenable to change.

Among this group, security messaging is the single most effective tool to discourage test misconduct before it happens. Security messages should be woven throughout every aspect of the testing process, because it often takes several repetitions before a message sinks in. This isn’t difficult to do: security messaging can be communicated effectively through candidate handbooks, the credentialing organization’s website, videos, events, and social media.

In addition to providing security messaging for candidates, credentialing organizations should also provide security messaging for subject matter experts, as well as for staff members who have access to examination materials. When working with these individuals, a security or confidentiality agreement is vital. This document should contain information on what specific behaviours are prohibited; what sanctions may be imposed; and, any additional requirements surrounding the disclosure of conflicts of interest.

In short: whether you’re dealing with candidates, subject matter experts, or staff, it is important to be clear about what is and is not acceptable. The goal is simple: let people know that you’re serious about test security.

Preventing Test Misconduct During the Test

One of the best-understood strategies for discouraging test misconduct involves test administration and proctor guidelines. Most organizations understand that a well-trained, vigilant proctor can prevent most types of test misconduct from occurring. However, there is almost always room for improvement. To that end, the National College Testing Association (NCTA) has developed widely used standards to help discourage misconduct during test administration:

Test Centres

The following guidelines will enhance test security in both paper-and-pencil and computer-based testing centres:

- Test handling and storage procedures should be documented and followed rigidly. This includes physical and/or electronic storage of test materials.

- The test centre must be locked during off-hours and should have a physical layout that is conducive to secure administration. This includes minimum spacing between workstations (e.g., 1 metre) or physical partitions between candidates.

- The proctor(s) should be able to observe the candidates at all times. This includes direct observation, as well as monitoring through a closed-circuit system.

- Test centre policies and procedures should be evaluated on an ongoing and systematic basis.
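To make the spacing guideline concrete, a seating plan can be checked automatically before test day. The sketch below is a hypothetical helper, not part of the NCTA standards: it assumes each workstation’s position is recorded as (x, y) coordinates in metres, and it reuses the 1-metre example above as the minimum.

```python
import math
from itertools import combinations

# Hypothetical seating-plan format: workstation ID -> (x, y) position in metres.
Seat = tuple[float, float]

MIN_SPACING_M = 1.0  # example minimum taken from the guideline above


def spacing_violations(seats: dict[str, Seat],
                       min_spacing: float = MIN_SPACING_M) -> list[tuple[str, str, float]]:
    """Return every pair of workstations closer together than min_spacing metres."""
    violations = []
    for (id_a, pos_a), (id_b, pos_b) in combinations(sorted(seats.items()), 2):
        distance = math.dist(pos_a, pos_b)  # straight-line distance between seats
        if distance < min_spacing:
            violations.append((id_a, id_b, round(distance, 2)))
    return violations


if __name__ == "__main__":
    plan = {"A1": (0.0, 0.0), "A2": (1.2, 0.0), "A3": (2.0, 0.0), "B1": (0.0, 1.5)}
    for a, b, d in spacing_violations(plan):
        print(f"{a} and {b} are only {d} m apart")
```

A plan that passes this check still needs partitions or sightline review where seats face each other; the sketch only measures distance.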

Proctors

Proctors are individuals who are responsible for the safe administration of the test and who oversee testing activities. All proctors should be trained in general test security awareness, as well as in program-specific policies and procedures. Proctor-to-candidate ratios (e.g., 1 proctor per 15 candidates) should be established in advance. Regardless of the number of candidates, there should always be at least two proctors.
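The ratio and the two-proctor minimum combine into a simple staffing rule. A minimal sketch, assuming the 1:15 example ratio above; `proctors_required` and its parameter names are hypothetical, and each program would substitute its own policy values.

```python
import math

# Example policy values from the text; each organization should set its own.
CANDIDATES_PER_PROCTOR = 15
MINIMUM_PROCTORS = 2


def proctors_required(num_candidates: int,
                      candidates_per_proctor: int = CANDIDATES_PER_PROCTOR,
                      minimum: int = MINIMUM_PROCTORS) -> int:
    """Proctors needed to satisfy the ratio, never fewer than the minimum."""
    if num_candidates < 0:
        raise ValueError("num_candidates must be non-negative")
    by_ratio = math.ceil(num_candidates / candidates_per_proctor)  # round up: no partial proctors
    return max(minimum, by_ratio)


for n in (5, 15, 16, 40):
    print(n, "candidates ->", proctors_required(n), "proctors")
```

Rounding up means a 16th candidate triggers a second proctor under the ratio alone; here the floor of two already covers it, and the ratio only starts to bind above 30 candidates.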

A “Tip Line”

Many organizations have had good success with anonymous “tip lines” for suspected misconduct. These tip lines work much like Crime Stoppers: individuals (e.g., other candidates) can report observed or suspected test misconduct using a toll-free number or online form. In many versions of the tip line, people have the option to include their name and contact information, but can also report anonymously.

Detecting Test Misconduct After the Test

Data Forensics

Data forensics is used in a number of settings. Regardless of the setting or the complexity of the approach, however, the general concept is simple: establish a baseline of “normal” behaviour, and then identify instances where a candidate’s behaviour deviates from this baseline.
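A minimal sketch of the baseline idea, assuming a single numeric measure per candidate (here, total testing time in minutes, a hypothetical choice) and a simple z-score rule against a reference group. The class and function names and the threshold of 3 are illustrative assumptions; operational programs typically combine many measures and more robust statistics.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    mean: float
    sd: float


def build_baseline(reference_values: list[float]) -> Baseline:
    """Summarize 'normal' behaviour from a reference group of candidates."""
    if len(reference_values) < 2:
        raise ValueError("need at least two reference values")
    sd = statistics.stdev(reference_values)
    if sd == 0:
        raise ValueError("reference values show no variation")
    return Baseline(statistics.mean(reference_values), sd)


def flag_deviations(values: dict[str, float], baseline: Baseline,
                    z_threshold: float = 3.0) -> dict[str, float]:
    """Return {candidate: z-score} for candidates far from the baseline."""
    flagged = {}
    for candidate, value in values.items():
        z = (value - baseline.mean) / baseline.sd  # distance from normal, in SD units
        if abs(z) > z_threshold:
            flagged[candidate] = round(z, 2)
    return flagged


if __name__ == "__main__":
    # Hypothetical total testing times (minutes) from a reference administration.
    baseline = build_baseline([110, 95, 120, 105, 100, 115, 98, 112])
    new_times = {"C101": 104, "C102": 31, "C103": 118}
    print(flag_deviations(new_times, baseline))
```

Here only C102, who finished far faster than the reference group, is flagged. A flag is a prompt for review, not proof of misconduct.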

One specific type of data forensics involves detecting collusion (e.g., one candidate copying from another during the test). In collusion detection, the metrics generally involve flagging instances where a pair of candidates has an unusually high number of answers in common when compared to all possible pairs of candidates. For example, if the expected number of common answers between any given pair of candidates were 60 on a 100-question test, a pair of candidates with 95 common answers might be flagged.
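The pairwise comparison described above can be sketched as follows. This assumes each candidate’s responses are stored as a string with one letter per item, and flags pairs whose number of common answers sits more than three standard deviations above the mean across all pairs; the threshold and function names are illustrative assumptions. Published collusion indices model expected agreement more carefully (for instance, by giving more weight to shared incorrect answers), so treat this as an illustration of the concept rather than an operational method.

```python
import statistics
from itertools import combinations


def common_answers(a: str, b: str) -> int:
    """Count items where two candidates chose the same option (equal-length strings assumed)."""
    return sum(x == y for x, y in zip(a, b))


def flag_pairs(responses: dict[str, str],
               z_threshold: float = 3.0) -> list[tuple[str, str, int]]:
    """Flag pairs whose agreement is unusually high relative to all pairs."""
    counts = {
        (a, b): common_answers(responses[a], responses[b])
        for a, b in combinations(sorted(responses), 2)
    }
    values = list(counts.values())
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return []  # every pair agrees equally often, so no pair stands out
    return [
        (a, b, count)
        for (a, b), count in counts.items()
        if (count - mean) / sd > z_threshold
    ]


if __name__ == "__main__":
    # Hypothetical 12-item responses (answer key "ABCDABCDABCD").
    # C1 to C5 each miss two different items; C6 copied C1.
    responses = {
        "C1": "BCCDABCDABCD",
        "C2": "ABDAABCDABCD",
        "C3": "ABCDBCCDABCD",
        "C4": "ABCDABDAABCD",
        "C5": "ABCDABCDBCCD",
        "C6": "BCCDABCDABCD",
    }
    print(flag_pairs(responses))  # [('C1', 'C6', 12)]
```

In the example data, C1 and C6 agree on all 12 items while every other pair agrees on 8, so only that pair is returned. The output is a list of pairs to investigate, not a verdict.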

The word “flagged” is used here intentionally. Candidates who are identified through a collusion detection process should be examined more closely (e.g., by considering seating plans, proctor observations, and irregularity reports) before any conclusion is drawn about their guilt. Remember that a flag does not prove that the candidates cheated; it indicates that deeper investigation is required.

Last year, I hosted a webinar that focused specifically on collusion detection and data forensics; it offers a more in-depth discussion of this topic.

Administration Scorecards

As part of an ongoing test security program, post-administration scorecards should be developed. These scorecards should: 1) grade the actual administration; and, 2) identify trends over time.

Not all incidents are equal in severity, so it is also helpful to develop a classification system for administration incidents. One possible classification system is as follows:

Level 1: Minor incident (e.g., the proctor had to call the credentialing organization to confirm or clarify a policy).

Level 2: Minor protocol breach (e.g., a candidate or proctor did not correctly follow a policy on washroom breaks).

Level 3: Major protocol breach (e.g., a candidate left the test centre without following the full exit procedure).

Level 4: Critical protocol breach (e.g., a candidate left the test centre with a copy of the test).
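One way to turn these four levels into a scorecard is to weight each incident by severity, grade each administration, and compare totals across administrations to see the trend. The weights, grade cut-offs, and the rule that any Level 4 incident fails an administration are hypothetical assumptions for illustration; each organization would set its own.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import IntEnum


class IncidentLevel(IntEnum):
    MINOR_INCIDENT = 1
    MINOR_BREACH = 2
    MAJOR_BREACH = 3
    CRITICAL_BREACH = 4


# Hypothetical weights: points each incident level costs an administration.
SEVERITY_WEIGHTS = {
    IncidentLevel.MINOR_INCIDENT: 1,
    IncidentLevel.MINOR_BREACH: 3,
    IncidentLevel.MAJOR_BREACH: 10,
    IncidentLevel.CRITICAL_BREACH: 50,
}


@dataclass
class Administration:
    label: str  # e.g. a session name or centre code
    incidents: list[IncidentLevel] = field(default_factory=list)

    def counts(self) -> Counter:
        """Number of incidents recorded at each level."""
        return Counter(self.incidents)

    def severity_score(self) -> int:
        return sum(SEVERITY_WEIGHTS[level] for level in self.incidents)

    def grade(self) -> str:
        """Hypothetical grading rule: any Level 4 incident fails the administration."""
        if IncidentLevel.CRITICAL_BREACH in self.incidents:
            return "F"
        score = self.severity_score()
        if score == 0:
            return "A"
        if score <= 5:
            return "B"
        if score <= 15:
            return "C"
        return "D"


def trend(history: list[Administration]) -> list[tuple[str, int, str]]:
    """Severity score and grade for each administration, in chronological order."""
    return [(a.label, a.severity_score(), a.grade()) for a in history]


if __name__ == "__main__":
    history = [
        Administration("Session 1", [IncidentLevel.MINOR_INCIDENT,
                                     IncidentLevel.MINOR_BREACH,
                                     IncidentLevel.MAJOR_BREACH]),
        Administration("Session 2", [IncidentLevel.MINOR_INCIDENT,
                                     IncidentLevel.MINOR_BREACH]),
        Administration("Session 3", [IncidentLevel.MINOR_INCIDENT]),
    ]
    for row in trend(history):
        print(row)
```

Keeping the per-level counts alongside the single score matters: a falling score can hide a rise in one category, which is exactly the kind of trend a scorecard should surface.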

Conclusion

Test security is often like the carnival game “Whack-a-Mole.” As test security incidents are dealt with, candidates develop new and inventive ways to attempt to circumvent the system. As a result, it is important that credentialing organizations not only review and solidify their current processes, but also continue to monitor new trends in the industry.